What have DALL-E and my site in common?

TL;DR

Before publishing this post: Nothing at all.

After publishing this post: There is an article mentioning DALL-E on this site.

Hacker News - the root of all evil

There was a time when I was reading

Hacker News on regular basis but now I tend

to avoid it. Most of the time, when I was visiting it and checking all those shiny

projects and articles that people create, after a few moments of

excitement, I was ending (best case) a little melancholic about my own

achievements. So it was rather counterproductive to keep doing that. But

somehow I ended up there again last night (I was venting out some frustration

about Medium comments responses system in google search and one of the top

hits was HN).

And there it was - someone posted a link to Open AI's DALL-E. And I was lost.

DALL-E

So what is DALL-E? I will simply quote the article's introduction:

DALL·E is a 12-billion parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text–image pairs. We’ve found that it has a diverse set of capabilities, including creating anthropomorphized versions of animals and objects, combining unrelated concepts in plausible ways, rendering text, and applying transformations to existing images.

My knowledge of generative networks never reached beyond simple GANNs and style transfer (which is still better than my grasp on NLP). However, the article is only 27 minutes read and not very detailed on a technical level, so it is digestable for me. The most important thing though, are the examples of what DALL-E is capable of. It can generate:

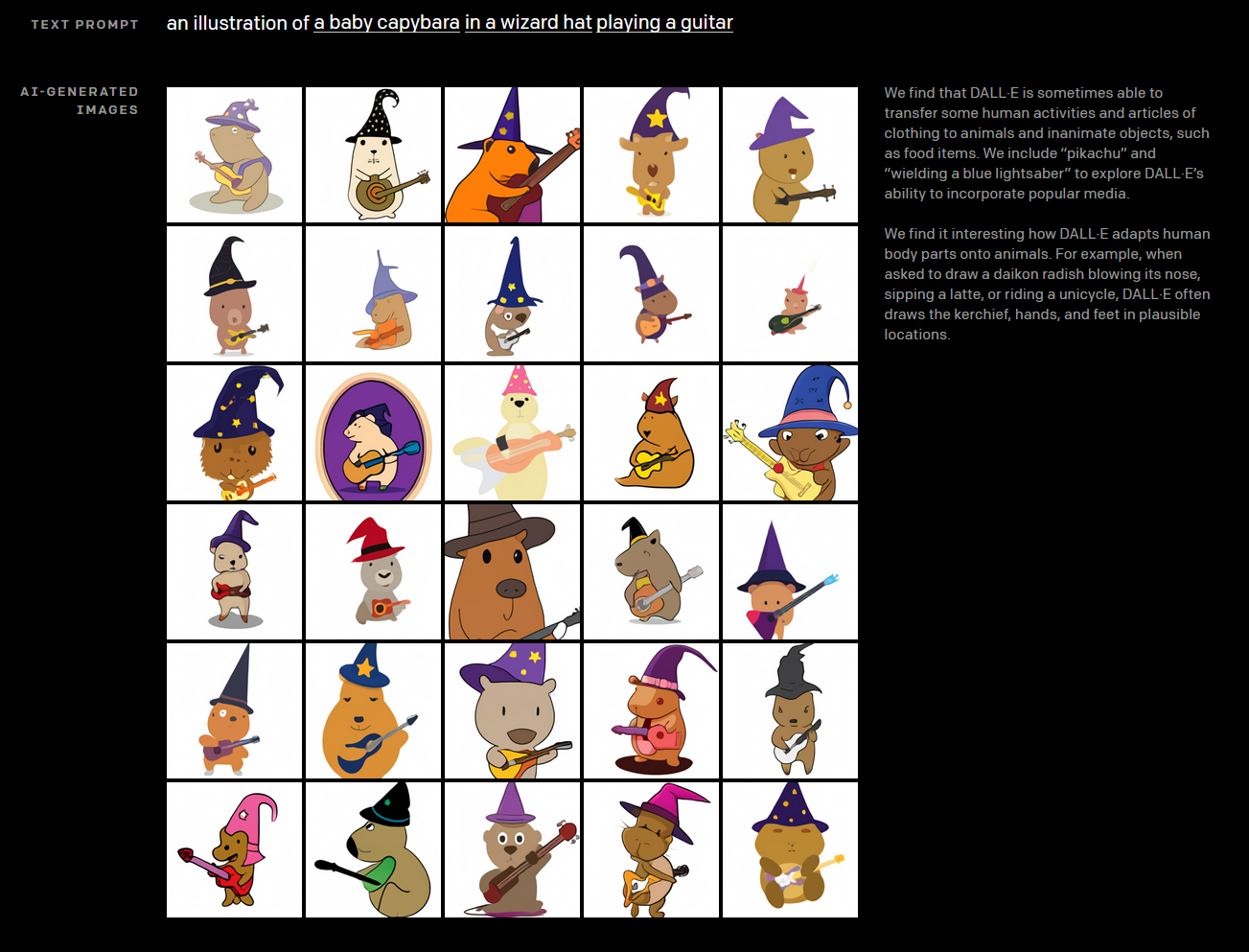

an illustration of a baby capybara in a wizard hat playing a guitar

and the results are great:

Examples of DALL-E output for prompt shown above, all credit goes to Open AI

Examples of DALL-E output for prompt shown above, all credit goes to Open AI

I mean, come on! That is impressive.

Yet again, similar to the introduction of GPT-3 architecture itself in May 2020, it makes you wonder when those models will start replacing humans (poor me as a developer - it might seem like a joke, but the fear is real). We should prompt some GPT-3 model to generate new JavaScript frontend framework every week or so.

There was a vivid discussion on HN (link here) under the article. Some clever points were made there. Some of them might give us, humans, some hope for the near future. Here are those which appealed most to me:

- Some concepts probably are much more well-defined (overrepresented) in the

training input set. And there is such possibility that those examples belong

to those classes. And, same as the model itself, the dataset is huge, so it

is not a surprise that you can find

anthropomorphized radishthere (search for大根アニメ, click). - It is a language model, so what it learns about is language (translation of written language to visual one in this case). It might not be as general as it is presented by researchers.

- The original GPT-3 model is 175 billion parameters (it is even hard to tell how Open AI deploys it for training, heck even interference seems hard). I've read some claims that this is enough to encode around a third of the input data directly. With 12 billion parameters, DALL-E is slightly thinner, but it is still big enough to be called enormous. So there are some controversies about reasoning capabilities of those transformer models. Do they reason or simply memorize the data? That would make them, very sophisticated and ML-based indeed, but only search algorithms (I know, exaggerating a little bit here ;) ).

- Black-box problem, which is becoming even more relevant with those models scaling more and more. We, humans, cannot reason about how the model works. We can't predict and explain why it made its decisions. It might work well in non-critical usages (creative work) but an unpredictable model is a no-go in many areas i.e. medicine or wealth management (sic!).

- Also, god only knows how processing-intensive training those transformers networks is, some people claim that for original GPT-3 it would be 355 years and a few million dollars. Better not dive into this topic, and not convert it to CO2 tonnes equivalent.

Nevertheless, DALL-E is still an IMPRESSIVE and TREMENDOUS work pushing both natural language processing and image generation next step further.

I could probably go on and on, and try to write something about Open AI's CLIP which was used in DALL-E's training. Or list some highlights about DALL-E, so it doesn't seem that I am a bitter man who can't admire the significance of this work (once again it is great). But it is getting late (1:36 AM) so it would be probably good for me to get some sleep.

So, what I am working on?

The whole DALL-E thing was supposed to be just a short (kinda ironic) intro from which I would move into site improvements that I am working on currently. But again, it is getting late, so I will limit myself to listing them here:

- Added reading time (Medium like) information component to posts. But it is not yet populated with the correct value.

- Addition of some CSS theme mechanism to the template, so I don't need to remember what is hex for that gray color used in posts meta info.

- Added hypothes.is sidebar to the site. Found it

accidentally during my Medium

commentsresponses rage. Seems like a very nice concept so I've decided to give it a try. - Read a little about SSR (funny how we, as industry, circled back to it) and web performance. Checked my site performance using Lighthouse. Everything would be fine, except that adding hypothes.is sidebar gives huge performance penalty.

It is not rocket science nor transformer based deep learning algorithms. But it is fun!